Tencent AI Researchers Introduce Hunyuan-T1: A Mamba-Powered Ultra-Large Language Model Redefining Deep Reasoning, Contextual Efficiency, and Human-Centric Reinforcement Learning

Large language models struggle to process and reason over lengthy, complex texts without losing essential context. Traditional models often suffer from context loss, inefficient handling of long-range dependencies, and difficulties aligning with human preferences, all of which affect the accuracy and efficiency of their responses. Tencent’s Hunyuan-T1 tackles these challenges by pairing a Mamba-powered architecture with reinforcement learning and curriculum strategies aimed at stronger context capture and reasoning.

Tencent describes Hunyuan-T1 as the first ultra-large model built on a Hybrid-Transformer-Mamba Mixture-of-Experts (MoE) architecture, a design that combines Transformer attention layers with Mamba state-space layers. Built on the TurboS fast-thinking base, Hunyuan-T1 is engineered to process long text sequences with less computational overhead. This helps the model capture extended context and manage long-distance dependencies, both crucial for tasks that demand deep, coherent reasoning.
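To make the Mixture-of-Experts idea concrete, here is a minimal sketch of generic top-k expert routing in plain Python. It is not Hunyuan-T1's router, whose details the announcement does not describe; the function names (`route_top_k`, `moe_forward`), the gate scores, and the toy experts are all hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k=2):
    """Pick the k experts with the highest gate probability.

    Returns a list of (expert_index, weight) pairs whose weights are
    renormalized to sum to 1. This is a generic illustration, not the
    routing rule of any specific model.
    """
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and mix their outputs by gate weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))


# Toy experts: each one is just a scalar function (hypothetical).
experts = [lambda x: x, lambda x: 2 * x, lambda x: 3 * x, lambda x: -x]
output = moe_forward(1.0, experts, gate_scores=[0.0, 2.0, 1.0, -1.0], k=2)
```

Only two of the four toy experts run for this input, which is the property that lets MoE models grow total parameter count without a matching increase in per-token compute.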

A key highlight of Hunyuan-T1 is its heavy reliance on reinforcement learning (RL) during the post-training phase. Tencent dedicated 96.7% of its post-training computing power to RL, enabling the model to refine its reasoning abilities iteratively. Techniques such as data replay, periodic policy resetting, and self-rewarding feedback loops help improve output quality, with the goal of responses that are detailed, efficient, and closely aligned with human expectations.
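The announcement names data replay and periodic policy resetting without specifying how they are implemented. The sketch below shows one common way such mechanics can be wired into a training loop; the class `ReplayBuffer`, the helpers `build_batch` and `should_reset`, and every number used are assumptions for illustration only.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of earlier training samples (hypothetical helper)."""

    def __init__(self, capacity):
        self.items = deque(maxlen=capacity)  # oldest samples are evicted first

    def add(self, sample):
        self.items.append(sample)

    def sample(self, n, rng):
        n = min(n, len(self.items))
        return rng.sample(list(self.items), n)


def build_batch(fresh, buffer, replay_fraction, rng):
    """Mix fresh samples with replayed ones, then store the fresh samples."""
    n_replay = int(len(fresh) * replay_fraction)
    replayed = buffer.sample(n_replay, rng)
    for s in fresh:
        buffer.add(s)
    return list(fresh) + replayed


def should_reset(step, reset_every):
    """True on the steps where the policy would be reset to a saved snapshot."""
    return step > 0 and step % reset_every == 0


rng = random.Random(0)
buffer = ReplayBuffer(capacity=4)
first = build_batch(["a", "b"], buffer, replay_fraction=0.5, rng=rng)
second = build_batch(["c", "d"], buffer, replay_fraction=0.5, rng=rng)
```

In this sketch the first batch contains only fresh samples because the buffer starts empty, while the second batch adds one replayed sample from the earlier step.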

To further boost reasoning proficiency, Tencent employed a curriculum learning strategy. This approach gradually increases the difficulty of training data while simultaneously expanding the model’s context length. As a result, Hunyuan-T1 is trained to use tokens more efficiently, progressing from basic mathematical problems to complex scientific and logical challenges.

Efficiency is another cornerstone of Hunyuan-T1’s design. The TurboS base’s ability to capture long-text information helps prevent context loss, a common issue in many language models, and Tencent reports that it doubles decoding speed compared to similar systems under the same deployment conditions. For users, this means faster responses without a trade-off in quality.
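As a rough illustration of the curriculum idea described above, the following sketch ramps a difficulty cap and a context-length cap together as training progresses. The linear schedule, the five difficulty levels, and the 4,096 to 32,768 token range are assumptions chosen for the example, not values reported by Tencent.

```python
def curriculum_stage(step, total_steps, min_ctx=4096, max_ctx=32768, levels=5):
    """Return (max_difficulty, context_length) allowed at a training step.

    Both limits grow linearly with training progress. All defaults are
    hypothetical and chosen only to make the example concrete.
    """
    progress = min(max(step / total_steps, 0.0), 1.0)
    max_difficulty = 1 + int(progress * (levels - 1))
    context_length = int(min_ctx + progress * (max_ctx - min_ctx))
    return max_difficulty, context_length


def eligible_examples(examples, step, total_steps):
    """Keep only examples that fit the current difficulty and length caps."""
    max_difficulty, context_length = curriculum_stage(step, total_steps)
    return [
        e for e in examples
        if e["difficulty"] <= max_difficulty and e["tokens"] <= context_length
    ]


# Hypothetical dataset: difficulty is a 1-5 label, tokens is the prompt length.
dataset = [
    {"name": "arithmetic", "difficulty": 1, "tokens": 512},
    {"name": "olympiad proof", "difficulty": 4, "tokens": 12000},
    {"name": "long scientific derivation", "difficulty": 5, "tokens": 30000},
]
early = eligible_examples(dataset, step=0, total_steps=100)
late = eligible_examples(dataset, step=100, total_steps=100)
```

Early in training only the short, easy example passes the filter; by the final step all three do.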

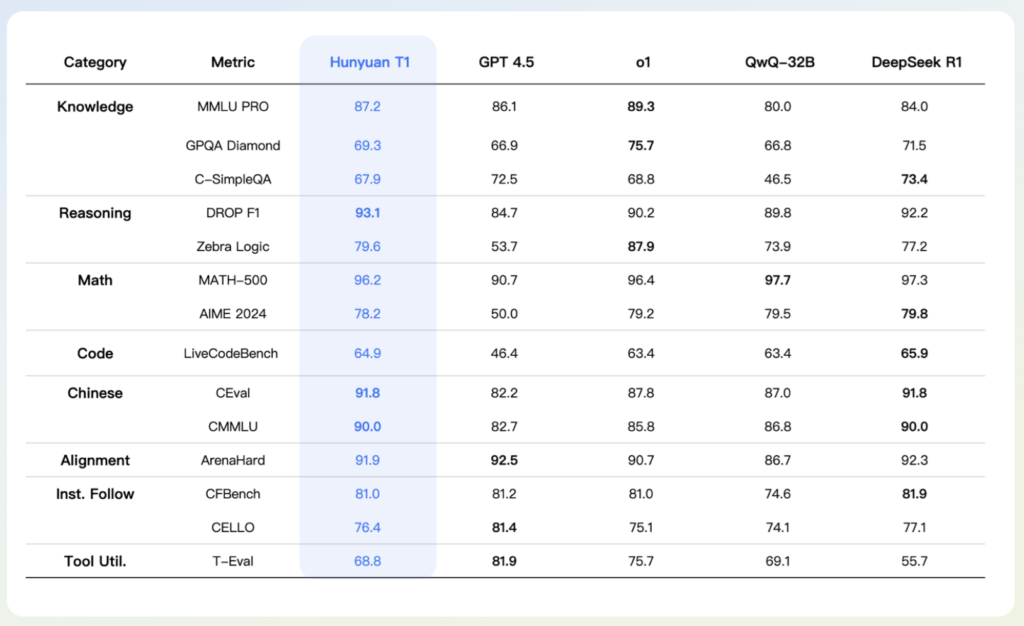

The model has achieved strong scores on multiple benchmarks: 87.2 on MMLU-Pro, which tests a range of subjects including humanities, social sciences, and STEM fields; 69.3 on GPQA-diamond, a challenging evaluation featuring doctoral-level scientific problems; 64.9 on LiveCodeBench for coding tasks; and 96.2 on the MATH-500 benchmark for mathematical reasoning. These results underscore Hunyuan-T1’s versatility and its ability to handle professional-grade tasks across various fields.

Beyond quantitative metrics, Hunyuan-T1 is designed to deliver outputs with human-like understanding and creativity. During its RL phase, the model underwent a comprehensive alignment process that combined self-rewarding feedback with external reward models. This dual approach is intended to produce responses that are accurate, rich in detail, and natural in flow.
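The article does not say how the self-rewarding signal and the external reward model are combined. One simple possibility is a weighted blend, sketched below; the function `combined_reward` and the default weight of 0.3 are purely illustrative assumptions.

```python
def combined_reward(self_score, external_score, self_weight=0.3):
    """Blend a self-assigned score with an external reward model's score.

    A weighted average is an assumed combination rule, not one confirmed
    by the source. Both scores are expected to be on the same scale.
    """
    if not 0.0 <= self_weight <= 1.0:
        raise ValueError("self_weight must be between 0 and 1")
    return self_weight * self_score + (1.0 - self_weight) * external_score


# Hypothetical scores on a 0-1 scale.
reward = combined_reward(self_score=1.0, external_score=0.0)
```

Keeping the external model's weight higher, as in this sketch, is one way to limit the risk of a model over-rewarding its own outputs.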

In conclusion, Tencent’s Hunyuan-T1 combines an ultra-large-scale, Mamba-powered architecture with state-of-the-art reinforcement learning and curriculum strategies. Hunyuan-T1 delivers high performance, enhanced reasoning, and exceptional efficiency.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.